ChatGPT became one of the most talked-about technologies, if not the most talked-about, by the end of 2022 thanks to its remarkable set of capabilities. The way ChatGPT optimizes language models for dialogue generation and offers a human-like conversational experience became one of the hottest topics in the IT industry.

Soon, the technology dominated the top charts in the AI chatbot segment of the IT industry, and several statistics prove it. For instance, a report by Exploding Topics shows that ChatGPT has over 100 million users at the moment, and that within just 5 days of its release the technology had gathered over 1 million users. At the time of writing, the language capabilities of ChatGPT assist over 15% of Americans.

ChatGPT optimizing language models for dialogue has several phases

ChatGPT is trained to respond in a conversational way. It can understand queries well, accept or reject prompts, follow up to resume conversations, and can even respond with a touch of emotions. Initially, OpenAI experts released ChatGPT as a sibling model of InstructGPT.

InstructGPT is trained to follow the instructions in a prompt and provide a detailed response, whereas ChatGPT adds a dialogue format that makes its responses and its understanding of prompts more versatile.

“We’ve trained a model called ChatGPT which interacts in a conversational way. The dialogue format makes it possible for ChatGPT to answer follow-up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests. ChatGPT is a sibling model to InstructGPT, which is trained to follow instructions in a prompt and provide a detailed response.”

- ChatGPT Author

ChatGPT optimizing language models that were used for training

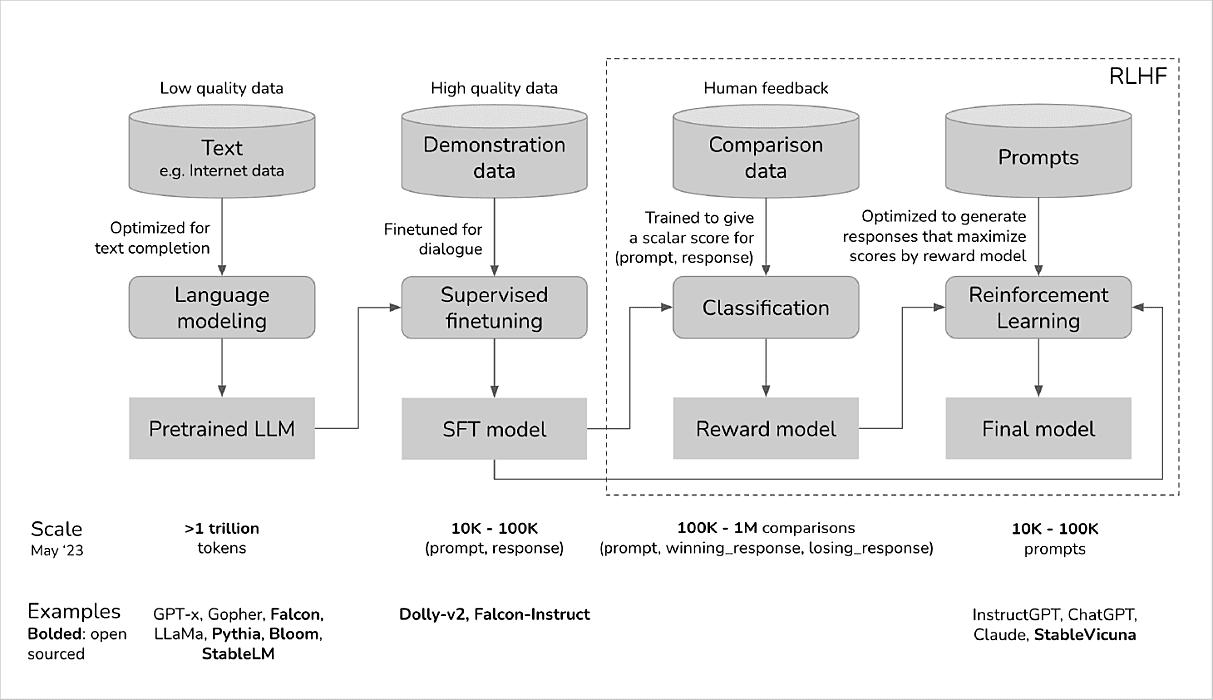

To train ChatGPT and the GPT-3.5 architecture, Azure AI infrastructure was used. ChatGPT's authors used the Reinforcement Learning from Human Feedback (RLHF) method to train the AI model for improved and more realistic dialogue. Now let’s talk about the series of models that have contributed to making OpenAI’s ChatGPT and its several versions smarter.

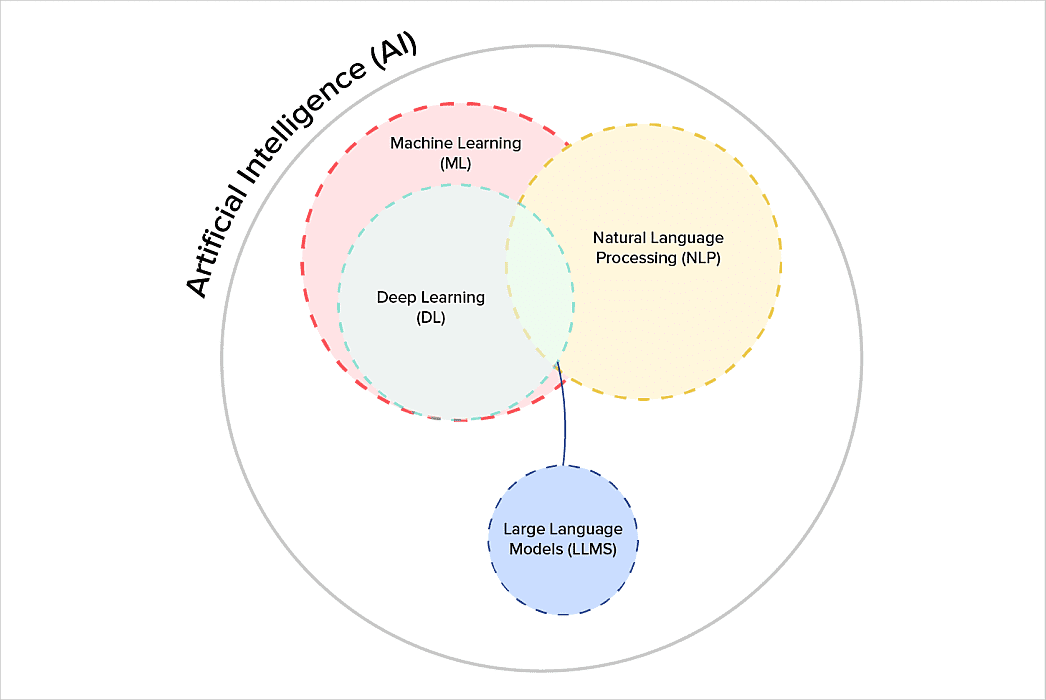

1. Large Language Models (LLMs)

Large language models are known for the massive text datasets they are trained on and for their ability to find relationships between the words in that text. LLMs generally get smarter and more capable as the training datasets and the models themselves grow in size.

What are Neural Networks?

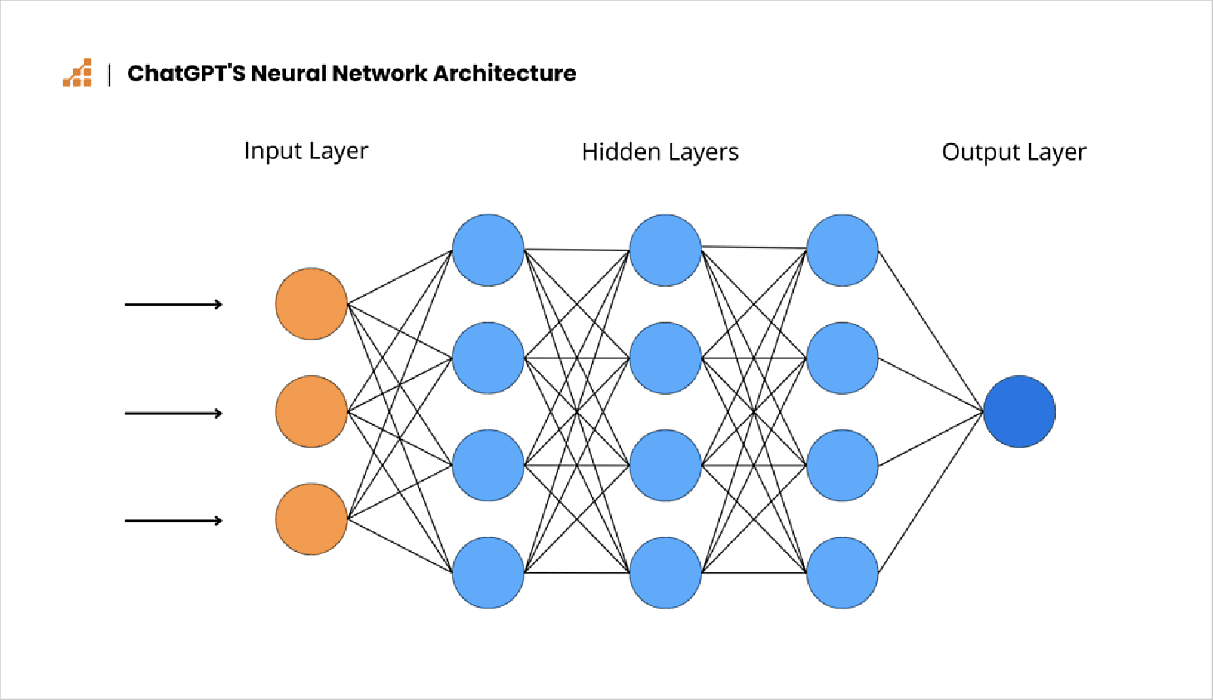

To understand this better, it is important to be clear about what neural networks are. A neural network is a type of machine learning model loosely inspired by the network of neurons in the human brain. Each neuron in the network calculates an output on the basis of the inputs it receives.

The power of a neural network comes from the connections between its neurons. Each connection is assigned a numerical weight. When an output has to be generated, the output of a neuron is multiplied by this weight and passed on as an input to the neurons that follow it.

Also, in language models like ChatGPT where neural networks are used, inputs are first converted into numerical form and then fed to the network to generate the output. In Large Language Models like ChatGPT, there are often millions of neurons with billions of connections between them. Each connection in these networks has its own weight, and together these weights determine the output the ChatGPT model generates.
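To make the idea of weighted connections concrete, here is a minimal sketch of a few neurons passing values to each other. The weights, biases, and the sigmoid function are illustrative assumptions chosen for this example, not values or choices taken from ChatGPT.

```python
import math

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed by a sigmoid."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-weighted_sum))

# Two first-layer neurons read the same raw inputs (all weights are made-up values).
inputs = [0.5, -1.0, 2.0]
hidden = [
    neuron_output(inputs, [0.4, 0.1, -0.2], bias=0.0),
    neuron_output(inputs, [-0.3, 0.8, 0.5], bias=0.1),
]

# The outputs of those neurons become the inputs of the next neuron.
output = neuron_output(hidden, [1.5, -0.7], bias=0.2)
print(hidden, output)
```

Changing any single weight changes how strongly one neuron influences the next, which is why the weights matter so much.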
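The conversion of text into numbers can be sketched with a toy word-level vocabulary. This is only an illustration: `build_vocab` and `encode` are hypothetical helpers, and GPT models actually rely on subword tokenizers rather than whole words.

```python
def build_vocab(texts):
    """Assign an integer id to every distinct lowercase word."""
    vocab = {"<unk>": 0}  # id 0 is reserved for words never seen before
    for text in texts:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab

def encode(text, vocab):
    """Replace each word with its id, falling back to <unk> for unknown words."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

vocab = build_vocab(["how does chatgpt work", "chatgpt answers questions"])
ids = encode("How does ChatGPT answer questions", vocab)
print(ids)  # [1, 2, 3, 0, 6] because "answer" is not in the toy vocabulary
```

These lists of integers, not the raw letters, are what the network receives as input.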

But the question is who assigns these weights to neuron connections for language optimization?

Well, language models like LLMs do that themselves during the training process. During training, a model is fed a massive amount of data that it uses as a reference. Depending on the size of the project, gathering and processing this data can sometimes cost millions of dollars. By reviewing this large amount of data, a model finds patterns in text on its own.

To train language models for dialogue, programmers prepare the algorithms, the rules, and the architecture of a model. These algorithms then let the model prepare itself to process the data. Human programmers build the algorithms, but they do not interfere in processes such as assigning weights to neuron connections.

Additionally, during the training process of language models developed by OpenAI, the system is provided with feedback on the output to help it produce better results. There are trial and error stages too, plenty of them, but as it gathers big data and gets more computational power, the language model generates results that are higher in quality.
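The trial-and-error idea of a model setting its own weights from feedback can be sketched with a single weight and plain gradient descent. The data, the learning rate, and the squared-error feedback are assumptions picked for this toy example. Real language models adjust billions of weights with far more elaborate setups.

```python
# Toy data generated by the rule y = 3 * x; the model has to discover the 3 itself.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

weight = 0.0          # arbitrary starting value, nobody sets the final weight by hand
learning_rate = 0.05

def mean_squared_error(w):
    """Feedback signal: how far the predictions are from the expected outputs."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

initial_loss = mean_squared_error(weight)
for step in range(200):
    # Gradient of the mean squared error with respect to the weight.
    gradient = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
    weight -= learning_rate * gradient  # nudge the weight to reduce the error
final_loss = mean_squared_error(weight)

print(round(weight, 3), initial_loss, final_loss)
```

The programmer only wrote the update rule. The value of the weight came out of repeated feedback on the output.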

2. Supervised Fine Tuning (SFT) Model

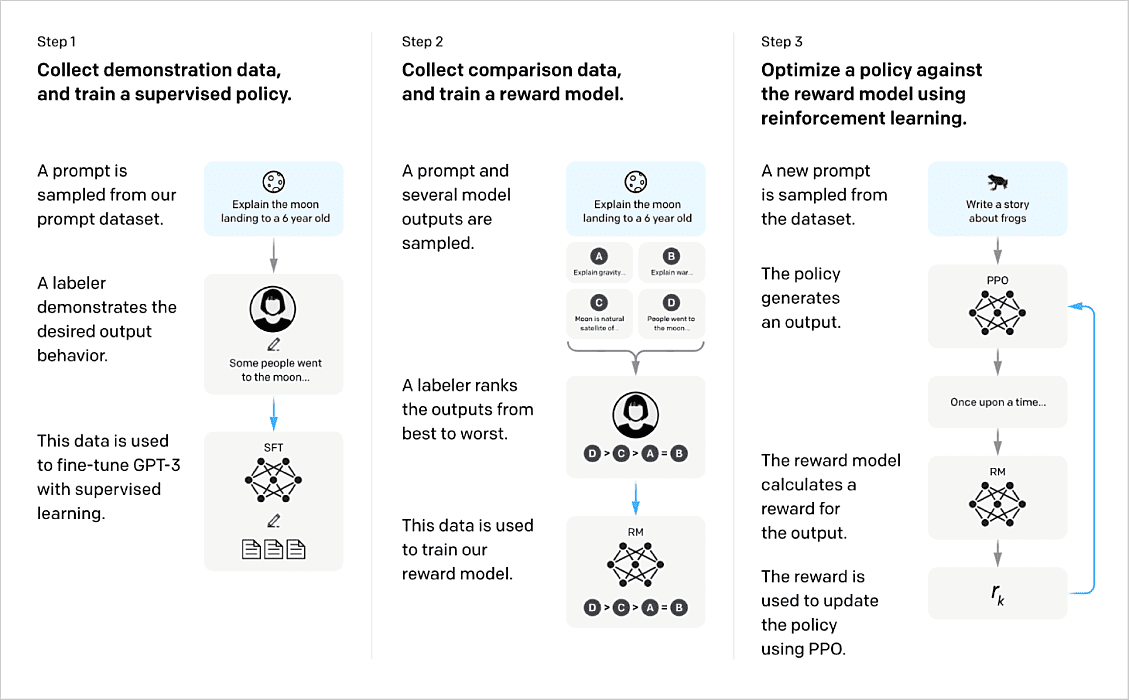

ChatGPT’s optimization for dialogue involves several models, and each one helps the product improve the efficiency, accuracy, and quality of its responses. OpenAI used Supervised Fine-Tuning (SFT) as a part of its RLHF training strategy.

To fine-tune the GPT-3 model, OpenAI first hired 40 contractors who created a supervised training dataset to improve the quality of ChatGPT’s dialogue generation. In this dataset, each input was paired with an output to teach the model how to respond. The OpenAI API was the source from which these inputs, or prompts as you know them, were collected.

The labelers who were hired wrote responses for these inputs to give the model a sample of good responses. This SFT model was used to create a more advanced model called GPT-3.5, which free users of ChatGPT can access at the moment.

As per a report by Towards Data Science, the process of optimizing ChatGPT's language models for dialogue also included creating sample inputs based on different types of prompts. Here are some examples:
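A supervised dataset of this kind can be pictured as a list of prompt and response pairs. The sketch below uses made-up examples and a hypothetical `Demonstration` class. It also assumes the usual training signal for such data, namely how much probability the model gives to the tokens of the labeler's response. The probabilities are invented for illustration.

```python
import math
from dataclasses import dataclass

@dataclass
class Demonstration:
    prompt: str     # input collected from users
    response: str   # answer written by a human labeler

# Made-up examples; the real dataset is not reproduced here.
dataset = [
    Demonstration("Explain gravity to a child.",
                  "Gravity is what pulls things down to the ground."),
    Demonstration("Summarize: The cat sat on the mat all day.",
                  "A cat spent the day on a mat."),
]

def response_loss(token_probabilities):
    """Average negative log-likelihood of the labeler's response tokens."""
    return -sum(math.log(p) for p in token_probabilities) / len(token_probabilities)

# Hypothetical probabilities a model gives to each token of a labeler's response.
loss_before = response_loss([0.10, 0.20, 0.05])  # before fine-tuning
loss_after = response_loss([0.60, 0.70, 0.50])   # after fine-tuning

print(loss_before, loss_after)
```

Fine-tuning pushes the loss down, which means the model becomes more likely to answer the way the labelers did.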

- Few-shot prompts: These prompts chained multiple queries and responses into a single input. They were often longer, and trainers used them to teach the model more complex sets of queries.

- Plain prompts: Any simple prompt that is used to ask ChatGPT a question is a plain prompt.

- User-based prompts: As the name suggests, these prompts are quite specific. Users specify the task, the context, and the information that they are looking for from ChatGPT. This helps the language model developed by OpenAI understand the query better and provide responses that are tailored to users’ needs.
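The three prompt types above can be sketched as simple string templates. The function names and the `Q:`/`A:` and `Task:`/`Context:`/`Wanted:` layouts are assumptions made for illustration, not the format OpenAI's labelers used.

```python
def plain_prompt(question):
    """A plain prompt is just the question itself."""
    return question

def few_shot_prompt(examples, question):
    """Chain several query/response pairs ahead of the new query."""
    lines = []
    for query, response in examples:
        lines.append(f"Q: {query}")
        lines.append(f"A: {response}")
    lines.append(f"Q: {question}")
    lines.append("A:")
    return "\n".join(lines)

def user_based_prompt(task, context, wanted):
    """Spell out the task, the context, and the information being asked for."""
    return f"Task: {task}\nContext: {context}\nWanted: {wanted}"

plain = plain_prompt("What is the capital of France?")
few_shot = few_shot_prompt(
    [("2 + 2", "4"), ("3 + 5", "8")],
    "7 + 6",
)
user_based = user_based_prompt(
    "Write a product description",
    "An online store selling handmade candles",
    "Two friendly sentences for the home page",
)
print(few_shot)
```

The few-shot version is noticeably longer than the plain one, which matches the observation that these prompts were larger in size.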

3. Reinforcement Learning from Human Feedback (RLHF)

Applying RLHF to the Natural Language Processing (NLP) models behind ChatGPT helped the tool become more accurate and creative with time. The image below by Chip Huyen will help you understand where Reinforcement Learning actually takes place once an input is given to the ChatGPT model.

The reinforcement learning stage can be applied directly to the pre-trained LLM, but involving the Supervised Fine Tuning (SFT) model in between ensures better performance and accuracy in the responses generated.

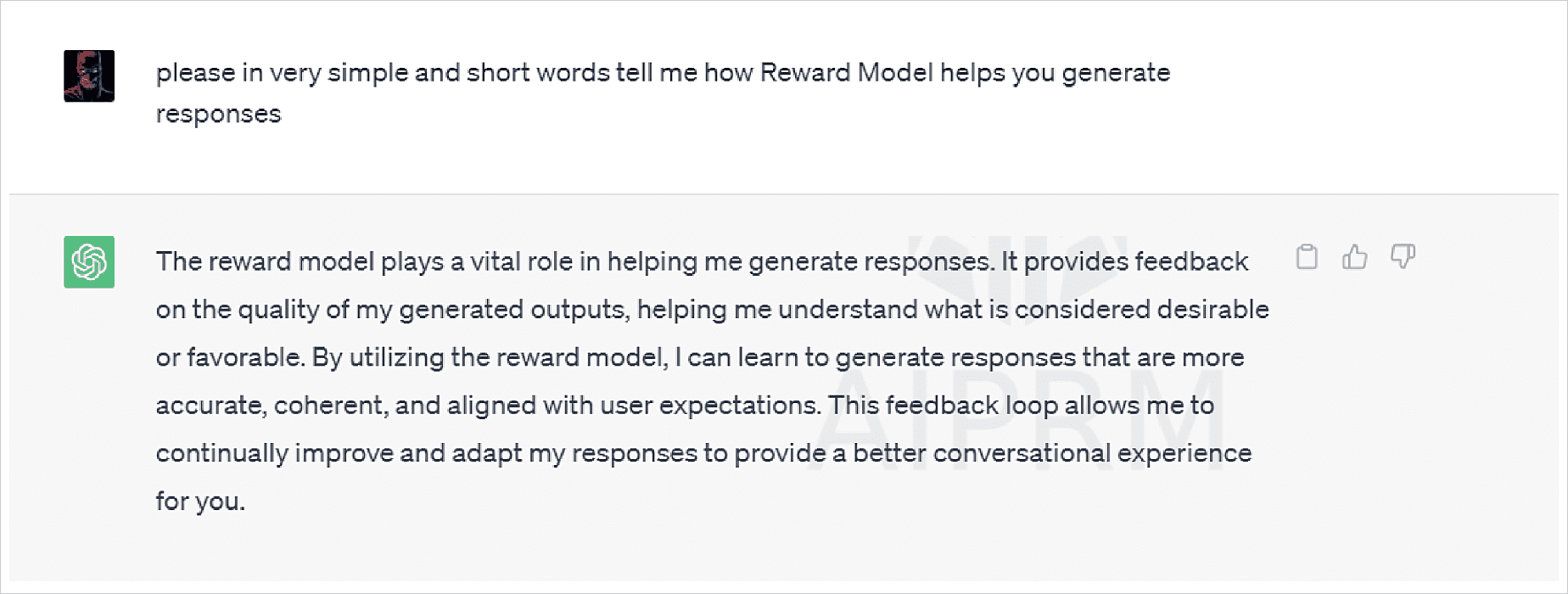
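The core loop of reinforcement learning can be sketched with a toy policy that chooses between three candidate responses. This is a simplified policy-gradient update with made-up reward scores, offered only as an illustration. OpenAI describes using Proximal Policy Optimization (PPO), which is more involved than this sketch.

```python
import math

def softmax(logits):
    """Turn raw preference scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Stand-in reward scores for three candidate responses to one prompt (made up).
rewards = [0.1, 0.9, 0.4]
logits = [0.0, 0.0, 0.0]   # the policy starts with no preference
learning_rate = 0.5

for _ in range(100):
    probs = softmax(logits)
    expected_reward = sum(p * r for p, r in zip(probs, rewards))
    # Exact gradient of the expected reward with respect to each logit.
    logits = [
        logit + learning_rate * p * (r - expected_reward)
        for logit, p, r in zip(logits, probs, rewards)
    ]

probs = softmax(logits)
print(probs)
```

Responses that score above the current average become more likely, and the rest become less likely, so the policy drifts toward the highest-rated response.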

4. The Reward Model (RM) helps ChatGPT learn

The Reward Model further contributes to improving the quality of responses and is based on a scoring strategy. The model is trained to score outputs generated by ChatGPT. Using trustworthy data and asking labelers to decide which of the outputs is better helps the model learn which output is higher in quality.
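One common way to turn labeler preferences into a training signal is a pairwise ranking loss, which is small when the preferred response receives the higher score. The sketch below assumes that loss and uses made-up scores. The function name is hypothetical.

```python
import math

def pairwise_ranking_loss(score_preferred, score_rejected):
    """-log(sigmoid(preferred - rejected)), written as log(1 + exp(-margin))."""
    margin = score_preferred - score_rejected
    return math.log(1.0 + math.exp(-margin))

# The labeler preferred the first response in each pair.
good_ordering = pairwise_ranking_loss(2.0, -1.0)   # model agrees with the labeler
bad_ordering = pairwise_ranking_loss(-1.0, 2.0)    # model disagrees with the labeler
tie = pairwise_ranking_loss(0.5, 0.5)              # model cannot tell them apart

print(good_ordering, tie, bad_ordering)
```

Training lowers this loss, so over time the scores line up with the choices the labelers made.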

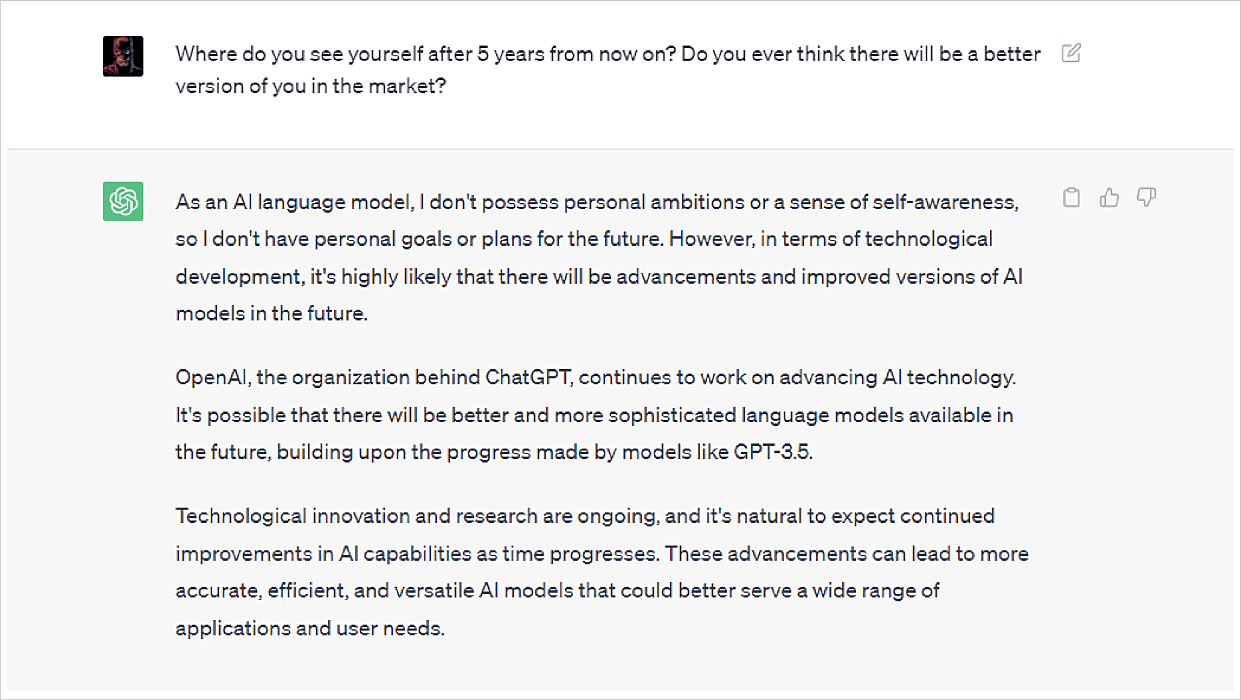

To put it in simple words, the Large Language Model of ChatGPT helps the AI find the right sequence of text to use in responses, and the Reward Model helps ChatGPT decide which responses are most desirable for the inputs it receives from users. As you can see in the above image, I asked ChatGPT 3.5 to explain how the reward model helps it generate responses.


The History of ChatGPT

ChatGPT has proven how Artificial Intelligence with the proper amount of data can assist human lives by making plenty of daily tasks easier. Its ability to provide accurate information while sounding like a human being is exciting for some and a shock for others.

But let’s be honest, even though ChatGPT suddenly got the hype it deserves by the end of 2022, its journey started some years back, when most people would not have been able to say what ChatGPT is. Now, to understand how ChatGPT is optimized for dialogue to assist humanity better, we have to look at how every previous version of GPT evolved in parallel with tech advancements.

OpenAI was founded in 2015 by Sam Altman, Greg Brockman, Elon Musk, Ilya Sutskever, Wojciech Zaremba, and John Schulman. The company initially released the GPT-1 model in June 2018 with 117 million parameters. Later, in February 2019, GPT-2 was introduced with 1.5 billion parameters, but it only became fully available to the public in November 2019.

Later, in June 2020, the revolutionary GPT-3 model was launched with a massive 175 billion parameters. The model offered a diverse range of capabilities that included drafting emails and writing blogs, descriptions, etc.

There’s GPT-3.5 as well, which people are currently using for free. This version was launched in 2022 and ended up attracting lots of attention. At the time of writing this blog, paid users of OpenAI’s ChatGPT can access its most advanced version, GPT-4.

What is the GPT-4 model?

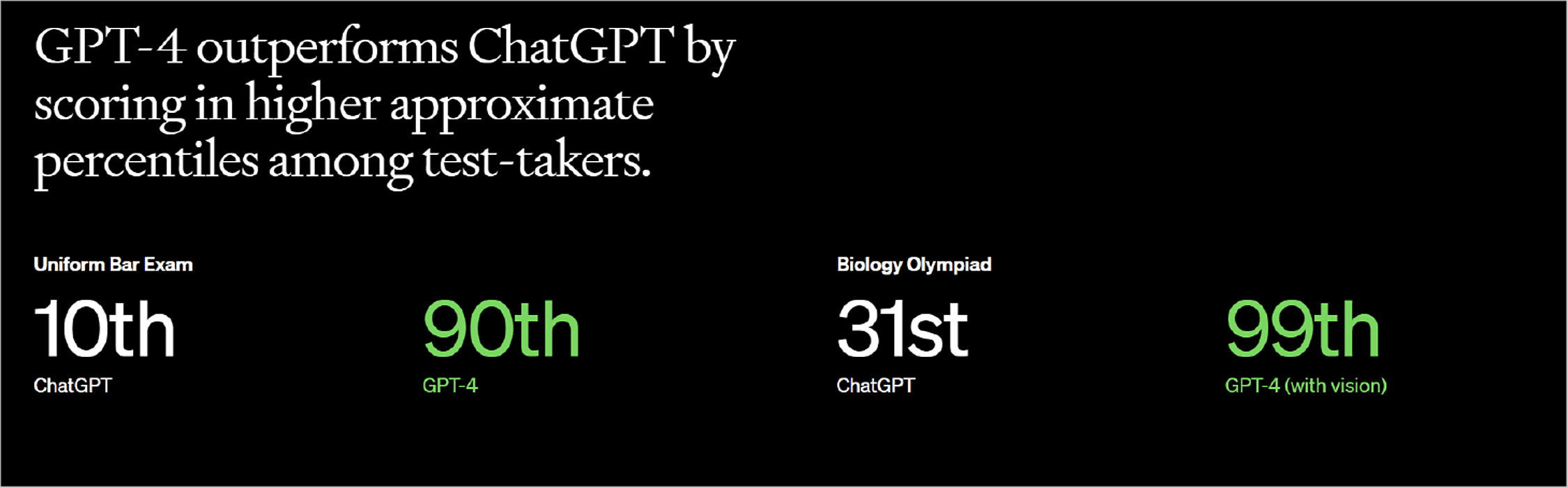

The latest version in the series, GPT-4 is much more advanced than any of its predecessors thanks to its massive amount of training data.

However, GPT-4 builds on what was learned from GPT’s previous versions. GPT, GPT-2, and GPT-3 helped the authors pave the way for a much more advanced language model that can respond more sophisticatedly and accurately. The datasets in GPT-4 are also more recent, which lets it provide more up-to-date information. In GPT-3.5, the datasets end in 2021, beyond which it cannot be relied on for factual information.

“We spent 6 months making GPT-4 safer and more aligned. GPT-4 is 82% less likely to respond to requests for disallowed content and 40% more likely to produce factual responses than GPT-3.5 on our internal evaluations.”

- ChatGPT Authors


Wrapping up

To conclude, ChatGPT has evolved with every version to offer better outputs to its users, and that is one of the reasons why its hype in the market is justified. Compared to ChatGPT alternatives such as BardAI, ChatGPT still stands on top in terms of offering well-optimized, detailed, and personalized responses.

Whether it is used by business owners, writers, or anyone else, ChatGPT has been trained through sophisticated techniques like the Reward Model, RLHF, and SFT, among others, to improve its accuracy. These training methods helped the technology attract over 1 million users within just 5 days of its release and over 100 million users since then. Now, there are several assistive tools available as well that help ChatGPT users come up with better-quality prompts to ask questions.

Attempting to understand the process of ChatGPT optimizing language models for dialogues has given us insight into OpenAI’s journey to build a tool that is helpful for humanity. The technology is also a perfect example of how Artificial Intelligence is capable of co-existing with people while making their lives easier.

Frequently Asked Questions

- How does ChatGPT work?

  ChatGPT leverages language models and uses its neural networks to understand inputs and generate outputs for them. Whenever it is given a prompt, the AI uses what it learned during training to find the most suitable combinations of words to answer the question.

- What is ChatGPT used for?

- Can we use ChatGPT for free?

- How is ChatGPT different from other chatbots?

- Is ChatGPT available on Android?

By Sakshi Kaushik

Content Writer (B2B Editorial)

A passionate writer and tech lover, she strives to share her expertise with mobile app developers and fellow tech enthusiasts. During her moments away from the keyboard, she relishes delving into thriller narratives, immersing herself in diverse realms.